AI is being pushed heavily these days by companies like Google and Samsung, but did you know that your iPhone also uses AI in many ways you don’t even know about? Let’s talk about these features in detail.

1 Copy Text From Images and Videos via Live Text

Live Text lets you copy text from any image or video on your iPhone. It uses machine learning to recognize handwritten and typed text in various languages, including Chinese, French, and German. You can also use it on your iPad or Mac, but on the iPhone side, you’ll need an iPhone Xs or later to use this feature.

To use Live Text, just open the Camera app, aim at the text you want to capture, and tap the Live Text button in the bottom-right corner of the viewfinder. From there, you can copy the text, translate it, or search for it online. Alternatively, you can use Live Text in the Photos app by opening a photo or video and long-pressing the text you want to select.

2 People and Pets Recognition in Photos

In the Photos app, you’ll find a neat feature that groups your photos by the people and pets that appear in them. This makes it super easy to find specific individuals in your photo collection. Apple heavily prioritizes privacy, so all the features mentioned in this article, including this one, use on-device processing for AI tasks, and no data is uploaded to Apple’s servers.

Just open the Photos app, and under the Albums tab, scroll down and tap People & Pets. This will show you a grid of the people and pets who commonly appear in your gallery, and you can view all their photos just by tapping on their icon.

3 Photonic Engine and Night Mode

We all know iPhones have excellent cameras, but it’s not just because of the hardware; the underlying software also plays an important role.

Night mode is the feature that best demonstrates your iPhone’s software prowess. In low-light conditions, when you take a photo with Night mode on, the camera captures multiple pictures at different exposures and then uses machine-learning algorithms to fuse them and highlight the best parts of every shot.
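To make the idea of fusing multiple exposures more concrete, here is a minimal Python sketch. It is not Apple’s algorithm, which is not public; it is a simple weighted average that favors pixels close to mid-gray. The function names, the `target` and `sigma` parameters, and the tiny sample frames are all assumptions made up for illustration.

```python
import math

def well_exposedness(value, target=0.5, sigma=0.2):
    """Weight a pixel (0.0 to 1.0) by how close it is to mid-gray.

    The Gaussian shape and its parameters are illustrative assumptions.
    """
    return math.exp(-((value - target) ** 2) / (2 * sigma ** 2))

def fuse_exposures(frames):
    """Fuse same-sized grayscale frames (lists of rows of floats in 0.0 to 1.0).

    Each output pixel is a weighted average of that pixel across all frames,
    so well-exposed samples contribute more than crushed or blown-out ones.
    """
    height, width = len(frames[0]), len(frames[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            pixels = [frame[y][x] for frame in frames]
            weights = [well_exposedness(p) for p in pixels]
            total = sum(weights)  # always > 0 because exp() is positive
            row.append(sum(w * p for w, p in zip(weights, pixels)) / total)
        fused.append(row)
    return fused

# Three hypothetical 1x3 frames of the same scene:
# under-exposed, normally exposed, and over-exposed.
dark = [[0.02, 0.10, 0.40]]
mid = [[0.10, 0.45, 0.90]]
bright = [[0.45, 0.95, 1.00]]

fused = fuse_exposures([dark, mid, bright])
print(fused)
```

In this toy example, the shadow pixel ends up much closer to the long exposure’s value, while the highlight pixel leans on the short exposure, which is roughly what “highlighting the best parts of every shot” means in practice.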

Another great example of your iPhone using AI in photography would be the Photonic Engine on the iPhone 14 and newer models. It leverages the larger sensors and directly applies computational algorithms to uncompressed images to further improve low-light photos, leading to more detail and brighter colors.

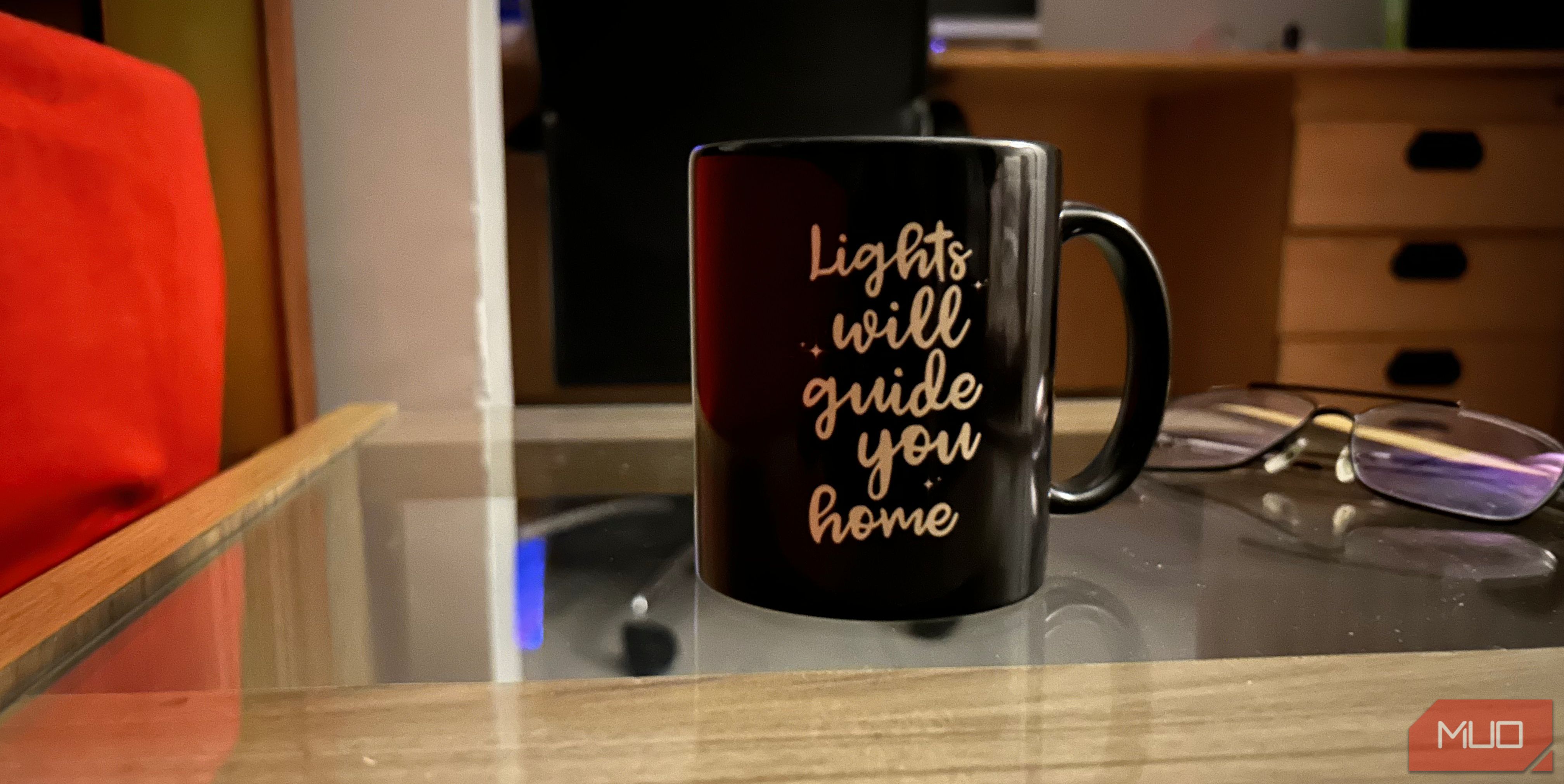

To show you how much of a difference AI and computational photography can make, look at the photo below, which I shot with Night mode enabled on my iPhone 14 with the Photonic Engine.

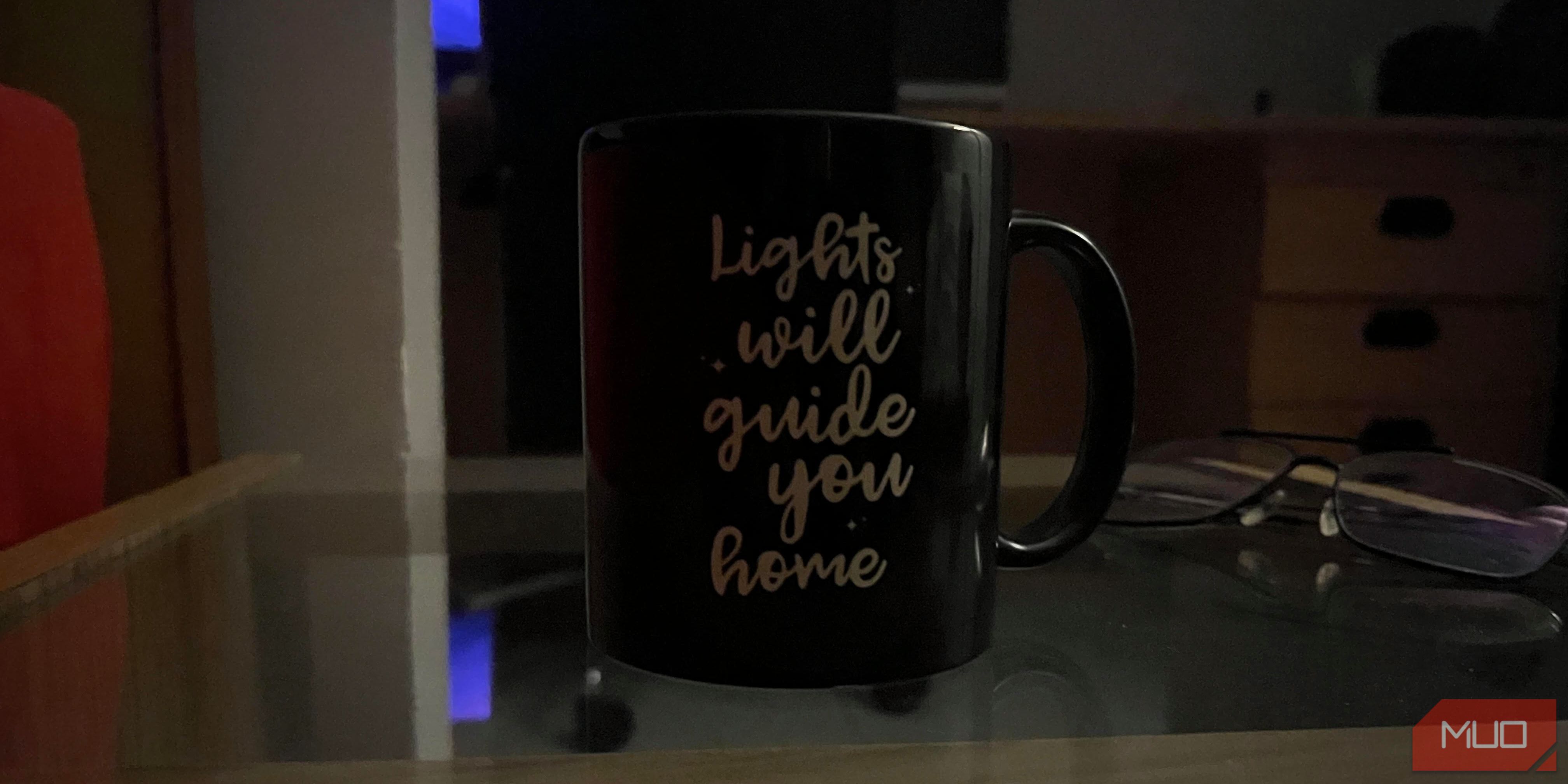

Now, here is a similar shot with Night mode disabled on an iPhone 13, which does not have the Photonic Engine.

Although the two devices have slightly different sensors, most of the improvement you see comes from software. You can clearly notice the difference that the AI features make. The iPhone 14 shot is far brighter and has almost no noise despite being taken in a dimly lit room.

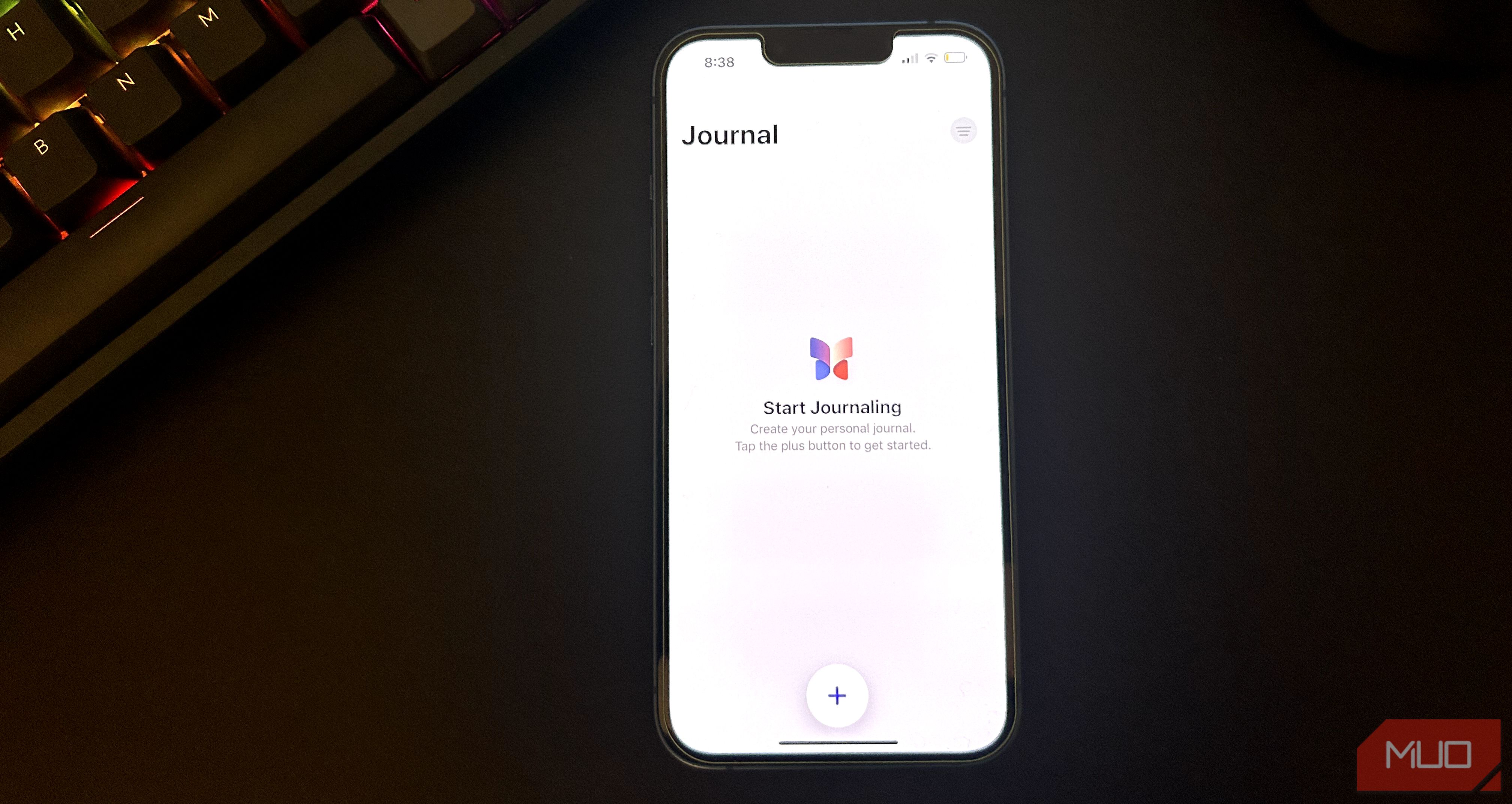

4 Personalized Suggestions in the Journal App

The Journal app is one of Apple’s first pushes towards improving your mental health. While the app may seem very basic to most users, with no traces of AI being implemented, a lot more is happening in the background.

The app utilizes on-device machine learning to analyze your recent activities, like your workouts, music preferences, and even the people you have been talking to. Based on this data, it provides personalized suggestions, attempting to anticipate your current moods and mental state.

Although all this data collection might sound like a privacy nightmare, Apple says all your entries are end-to-end encrypted. Since all the processing happens on-device, your data never leaves your iPhone.

5 Personal Voice

Your iPhone offers several accessibility features, and Personal Voice is among my top favorites. This feature serves individuals with conditions like ALS, who may eventually lose their ability to speak.

When setting up the feature, you record around 15 minutes of audio. Overnight, your iPhone processes this data, generating a synthesized version of your voice that you can use wherever needed. It’s a remarkable demonstration of what the Neural Engine, Apple’s on-device AI hardware, can do.

Once set up, you can type to speak with your Personal Voice during FaceTime and iPhone calls.

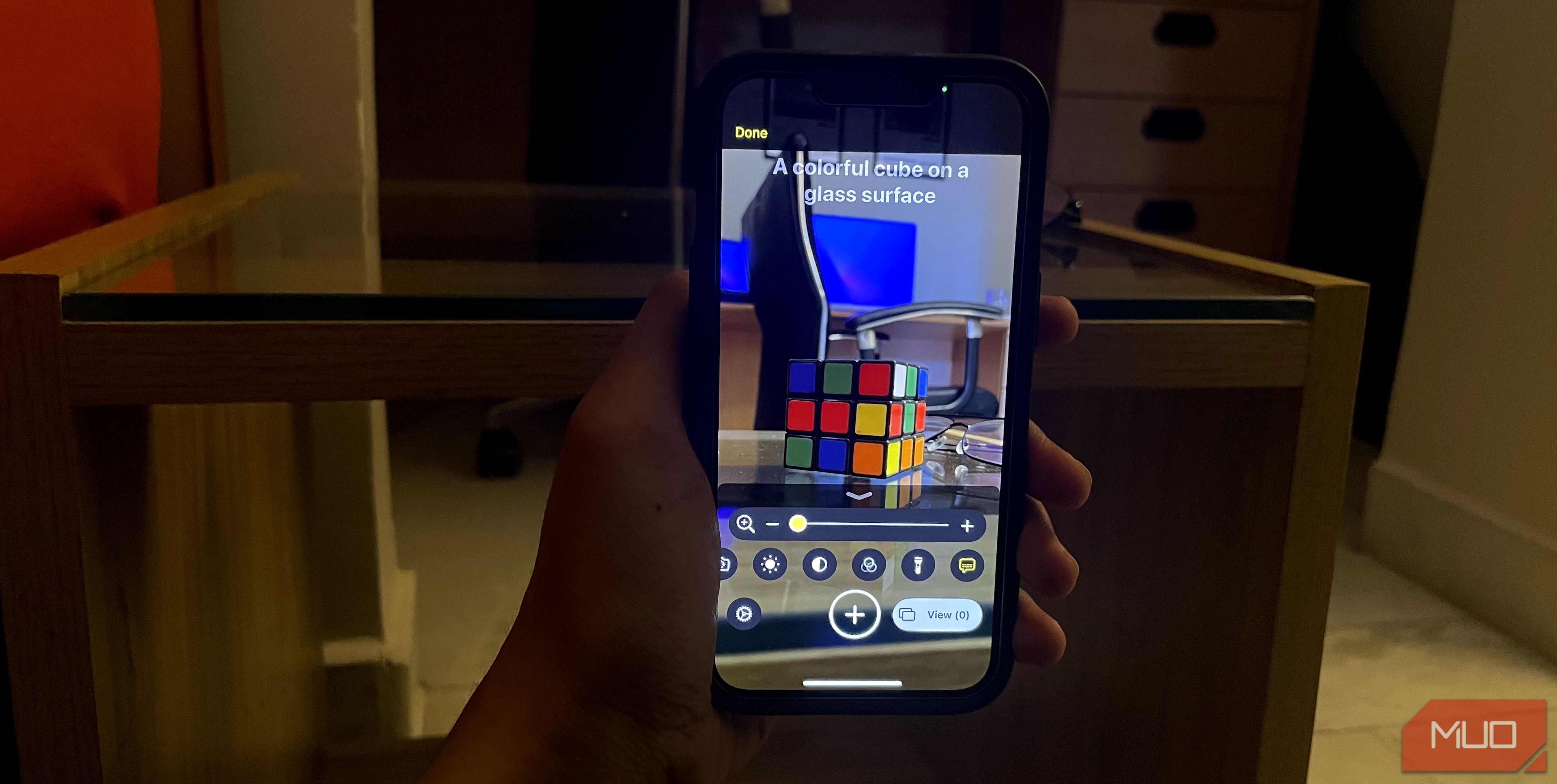

6 Image Descriptions

This is another accessibility feature that’s completely hidden on your iPhone but is super useful for people with impaired vision. If you can’t see images due to poor vision, you can use Image Descriptions with VoiceOver, and your iPhone will describe aloud what it sees in the photo.

The feature isn’t limited to the Photos app, though, as you can also get real-time information by using the Camera app while having VoiceOver turned on. Alternatively, you can use the Magnifier app and enable the feature by tapping the Settings icon in the bottom-left corner and tapping the plus (+) button next to Image Descriptions.

7 Face ID

Face ID is often overlooked due to how seamlessly it unlocks your iPhone. But did you know it leverages the Apple Neural Engine to meticulously construct a detailed 3D map of your facial features?

This process involves the TrueDepth camera, which captures depth data by projecting over 30,000 invisible infrared dots onto your face and analyzing how they land. These dots create a precise depth map, which is then used to form a comprehensive representation of your facial structure.
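As a rough illustration of how projected dots can become a depth map, the sketch below uses the textbook triangulation formula for structured-light systems: depth = focal length × baseline ÷ disparity, where disparity is how far a dot appears shifted from its expected position. This is not Apple’s actual pipeline, and the focal length, baseline, and disparity values are hypothetical numbers chosen only to make the arithmetic easy to follow.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Textbook triangulation: a larger dot shift means a closer surface."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical camera parameters (assumptions, not TrueDepth specifications).
FOCAL_LENGTH_PX = 600.0  # focal length expressed in pixels
BASELINE_M = 0.02        # distance between dot projector and infrared camera

# Hypothetical measured shifts, in pixels, for three of the projected dots.
dot_disparities_px = {"nose tip": 40.0, "cheek": 37.5, "ear": 30.0}

# The "depth map" here is just one distance (in meters) per dot.
depth_map_m = {
    name: depth_from_disparity(FOCAL_LENGTH_PX, BASELINE_M, disparity)
    for name, disparity in dot_disparities_px.items()
}

for name, depth in depth_map_m.items():
    print(f"{name}: {depth:.2f} m")
```

With thousands of dots instead of three, the same per-dot calculation yields a dense grid of distances, which is the kind of data a face-matching model could then work from.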

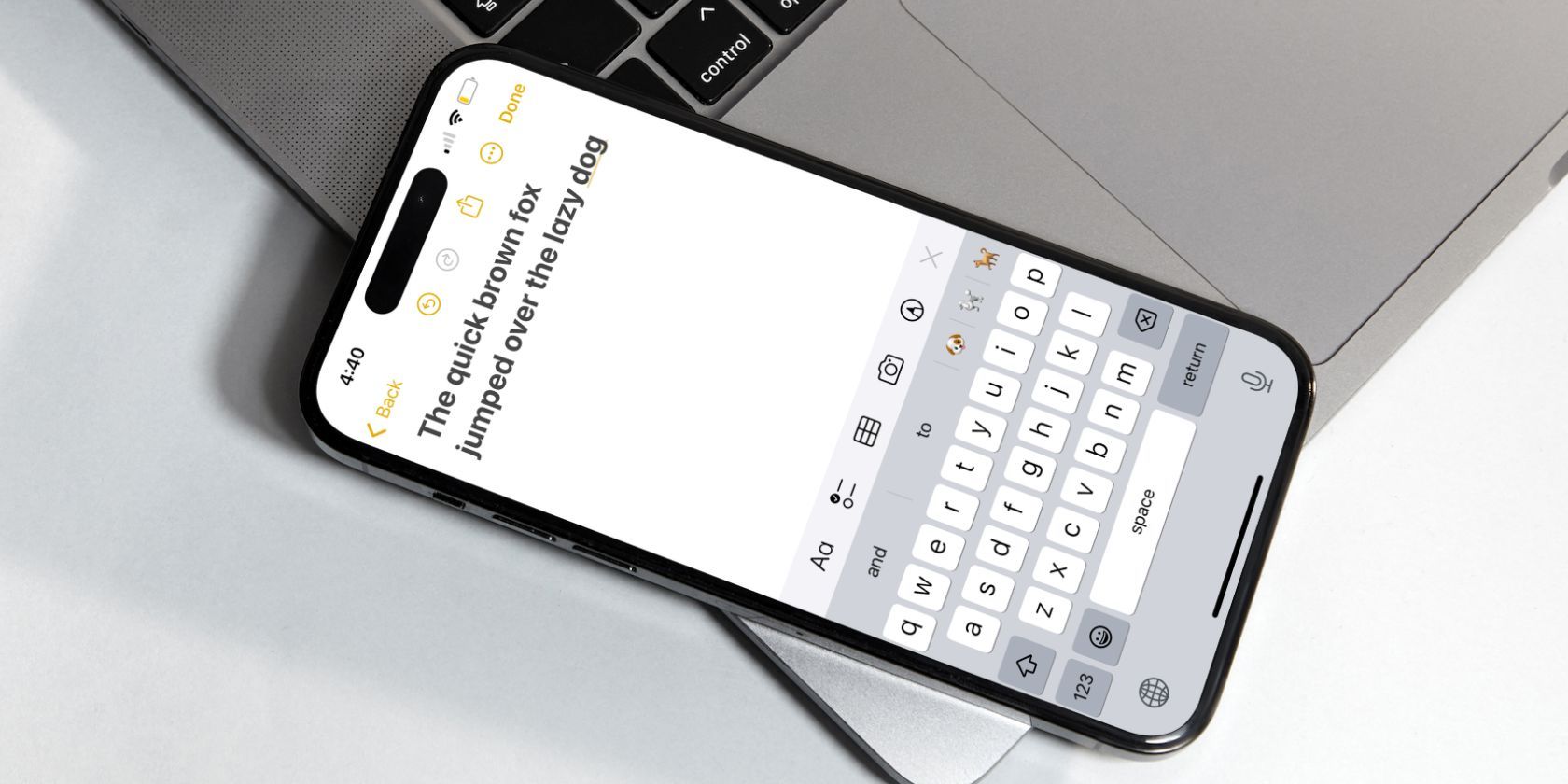

8 Predictive Text and Autocorrect

One of my biggest gripes with iPhones before iOS 17 was how messy autocorrect was compared to Android. Thankfully, it has improved a lot, as the iPhone keyboard now runs machine learning models every time you tap a key.

Another change that makes a big difference is that the keyboard is aware of the context of the sentence you are typing, giving you more accurate autocorrect options. Apple accomplished this by adopting a transformer language model, which uses neural networks to better understand relationships between the words in your sentences.

Also, don’t forget that iPhones use AI for the Predictive Text feature, which gives inline suggestions for auto-completing your sentences.
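To get a feel for what “predicting the next word” means, here is a deliberately tiny Python sketch. It uses a bigram model, which only looks at the single previous word, so it is far simpler than the transformer model Apple describes and is not how the iPhone keyboard works. The sample sentences and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def suggest_next(counts, previous_word, k=3):
    """Return up to k most frequent followers of previous_word."""
    followers = counts.get(previous_word.lower(), Counter())
    return [word for word, _ in followers.most_common(k)]

# A hypothetical typing history.
corpus = [
    "see you at the office",
    "see you at the gym",
    "see you at the office tomorrow",
    "meet me at home",
]

model = train_bigrams(corpus)
suggestions = suggest_next(model, "the")
print(suggestions)
```

A bigram model like this one has no sense of the rest of the sentence. A transformer model, by contrast, can take the earlier words into account, which is why context-aware suggestions tend to be more accurate.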

Your iPhone is way smarter than you might think, thanks to Apple’s quiet implementation of AI across various features. While we’ve covered some hidden tricks today, we expect Apple to announce a bunch of new AI features at the WWDC 2024 event.